On the top right corner of the page, click the drop-down arrow to the right of the Connect button and select Change runtime type.

Make sure a Python 3 runtime is selected. For this part of the workshop a CPU runtime is enough; no hardware accelerator is needed.

Now we can connect to the runtime by clicking Connect. This will create a Virtual Machine (VM) with compute resources we can use for a limited amount of time.

Caution

In free Colab accounts these resources are not guaranteed and can be taken away without notice (preemptible machines).

Data stored in this runtime will be lost when the runtime is deleted, unless it is first moved to other storage.

Sub-field of Artificial Intelligence that develops methods to address tasks that require human intelligence

Classification

what is this?

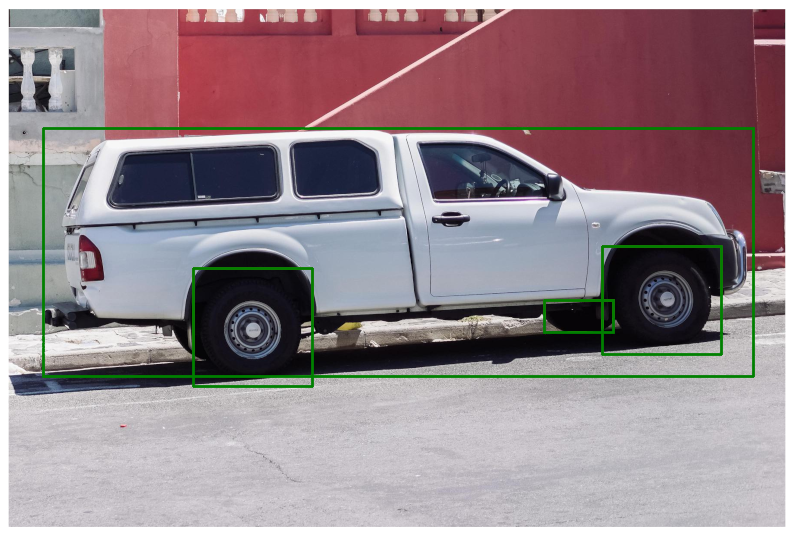

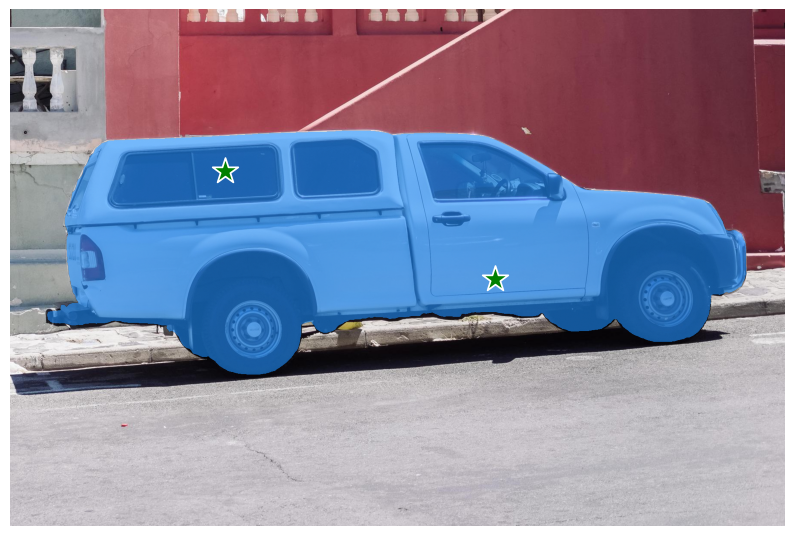

Detection

where is something?

Segmentation

where specifically is something?

Style transfer

Compression of image/video/etc…

Generation of content

Language processing

Supervised

Unsupervised

Weakly supervised

Reinforcement

…

For a task, we want to model the outcome/output (\(y\)) produced by a given input (\(x\)).

\(f(x) \approx y\)

Note

The complete set of (\(x\), \(y\)) pairs is known as the dataset (\(X\), \(Y\)).

Note

Inputs can be virtually anything, including images, text, video, audio, electrical signals, etc.

Outputs are expected to be some meaningful piece of information, such as a category, position, value, etc.

import torch
import torchvision

cifar_ds = torchvision.datasets.CIFAR100(root="/tmp", train=True, download=True)

Files already downloaded and verified

# Assumed step (not shown in the original): take one sample as a PIL image with its label
x_im, y = cifar_ds[0]

y = 19 (cattle)

A tensor is a multi-dimensional array. The term generalizes scalars, vectors, and matrices to arrays with more than two dimensions.

Tip

We can use the utilities in torchvision to convert an image from PIL to tensor

from torchvision.transforms.v2 import PILToTensor

pre_process = PILToTensor()

x = pre_process(x_im)

x = x.float()

type(x), x.shape, x.dtype, x.min(), x.max()

(torch.Tensor, torch.Size([3, 32, 32]), torch.float32, tensor(1.), tensor(255.))

Note

For convenience, PyTorch’s tensors have their channels axis before the spatial axes.
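To make the axis order concrete, the following sketch builds a random tensor with the same CHW shape as a CIFAR image and moves the channels axis last, which is the HWC layout that plotting libraries such as matplotlib expect. The tensor `x_chw` is a made-up stand-in, not a real CIFAR sample.

```python
import torch

# Hypothetical stand-in for one CIFAR image: 3 channels, 32 x 32 pixels (CHW).
x_chw = torch.rand(3, 32, 32)

# permute reorders the axes and returns a view: CHW -> HWC.
x_hwc = x_chw.permute(1, 2, 0)

print(x_chw.shape)  # torch.Size([3, 32, 32])
print(x_hwc.shape)  # torch.Size([32, 32, 3])
```

The pixel at row 5, column 7 holds the same three channel values in both layouts; only the indexing order changes.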

from torchvision.transforms.v2 import Compose, PILToTensor, ToDtype

pre_process = Compose([
    PILToTensor(),
    ToDtype(torch.float32, scale=True)
])

x = pre_process(x_im)

type(x), x.shape, x.dtype, x.min(), x.max()

(torch.Tensor, torch.Size([3, 32, 32]), torch.float32, tensor(0.0039), tensor(1.))

Training set: the examples (\(x\), \(y\)) used to teach a machine/model to perform a task.

Validation set: used to measure the performance of a model during training. This subset is not used for training the model, so it is unseen data.

Test set: this set of samples is not used when training. Its purpose is to measure the generalization capacity of the model.
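One possible way to carve a validation subset out of a training dataset is `torch.utils.data.random_split`. The sketch below is an illustration only: the toy dataset, the 80/20 proportion, and the seed are assumptions, not the split used in this workshop.

```python
import torch
from torch.utils.data import TensorDataset, random_split

# Hypothetical toy dataset: 100 random "images" with integer labels.
toy_ds = TensorDataset(torch.rand(100, 3, 32, 32), torch.randint(0, 100, (100,)))

# Assumed 80/20 split; the fixed generator seed makes the split reproducible.
train_ds, val_ds = random_split(
    toy_ds, [80, 20], generator=torch.Generator().manual_seed(0)
)

print(len(train_ds), len(val_ds))  # 80 20
```

The two subsets share no samples, which is what keeps the validation data unseen during training.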

cifar_test_ds = torchvision.datasets.CIFAR100(root="/tmp", train=False, download=True, transform=pre_process)

Files already downloaded and verified

Models that construct knowledge in a hierarchical manner are considered deep models.

Important

We have to reshape x before feeding it to the model because x is an image with axes: Channels, Height, Width (CHW), but the Logistic Regression input should be a vector.
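As a rough sketch of that reshaping step, the snippet below flattens one CHW image into a vector and feeds it to a single linear layer. The random input and the `log_reg` layer are assumptions standing in for the workshop's own sample and model.

```python
import torch
from torch import nn

x = torch.rand(3, 32, 32)            # one image in CHW layout (random stand-in)
x_vec = x.reshape(-1, 3 * 32 * 32)   # a batch with one vector, shape (1, 3072)

# Hypothetical logistic-regression model: one linear layer producing 100 class logits.
log_reg = nn.Linear(in_features=3 * 32 * 32, out_features=100)
logits = log_reg(x_vec)

print(x_vec.shape)   # torch.Size([1, 3072])
print(logits.shape)  # torch.Size([1, 100])
```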

A model's behavior depends directly on the values of its set of parameters \(\theta\).

Note

As models increase their number of parameters, they become more complex.
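To get a feel for how quickly the parameter count grows, the following sketch counts the weights and biases of fully connected layers. The hidden layer size of 128 is an arbitrary choice for illustration, not the architecture used in this workshop.

```python
def linear_params(in_features, out_features, bias=True):
    """Number of trainable parameters in a fully connected layer."""
    return in_features * out_features + (out_features if bias else 0)

# Logistic regression on flattened 3x32x32 images with 100 classes.
log_reg_params = linear_params(3 * 32 * 32, 100)

# Hypothetical MLP with one hidden layer of 128 units (size chosen for illustration).
mlp_params = linear_params(3 * 32 * 32, 128) + linear_params(128, 100)

print(log_reg_params)  # 307300
print(mlp_params)      # 406244
```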

Training is the process of optimizing the values of \(\theta\)

The loss function is a measure of the difference between the expected outputs and the predictions made by a model, \(L(Y, \hat{Y})\).

Note

We look for smooth loss functions whose gradient we can compute.

In the case of regression tasks we generally use the Mean Squared Error (MSE).

\(MSE = \frac{1}{N}\sum\limits_i^N \left(y_i - \hat{y}_i\right)^2\)
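A small worked example of the MSE, written in plain Python with made-up target and prediction values:

```python
def mse(y_true, y_pred):
    """Mean of the squared differences between targets and predictions."""
    n = len(y_true)
    return sum((y - y_hat) ** 2 for y, y_hat in zip(y_true, y_pred)) / n

# Squared errors are 0, 0.25 and 1, so the mean is 1.25 / 3.
example_mse = mse([1.0, 2.0, 3.0], [1.0, 2.5, 2.0])
print(example_mse)
```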

And for classification tasks we use the Cross Entropy (CE) function.

\(CE = -\frac{1}{N}\sum\limits_i^N\sum\limits_k^C y_{i,k} \log(\hat{y}_{i,k})\)

where \(C\) is the number of classes.

Note

For the binary classification case:

\(BCE = -\frac{1}{N}\sum\limits_i^N \left(y_i \log(\hat{y}_i) + (1 - y_i) \log(1 - \hat{y}_i)\right)\)

Note

According to the PyTorch documentation, the CrossEntropyLoss function takes unnormalized logits as input, not probabilities. So, we don’t need to apply a softmax to the output of the MLP model.
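The sketch below illustrates this behavior with made-up logits and targets: passing raw logits to `CrossEntropyLoss` gives the same value as applying a log-softmax and then averaging the negative log-probabilities of the correct classes by hand.

```python
import torch
import torch.nn.functional as F

logits = torch.tensor([[2.0, 0.5, -1.0], [0.1, 0.2, 3.0]])  # made-up model outputs
targets = torch.tensor([0, 2])                               # made-up class indices

loss_fun = torch.nn.CrossEntropyLoss()
loss_from_logits = loss_fun(logits, targets)

# Manual equivalent: log-softmax followed by the negative log-likelihood.
log_probs = F.log_softmax(logits, dim=1)
loss_manual = -log_probs[torch.arange(len(targets)), targets].mean()

print(loss_from_logits.item(), loss_manual.item())
```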

Important

We are using a PyTorch loss function, and it expects PyTorch’s tensors as arguments, so we have to convert y to tensor before computing the loss function.

Gradient-based methods are able to fit large numbers of parameters when a smooth loss function is used as the optimization target.

Note

We compute the gradient of the loss function with respect to the model parameters using the chain rule from calculus. Generally, this is managed by machine learning packages such as PyTorch and TensorFlow with a method called backpropagation.

Note

To backpropagate the gradients we call the backward() method of the loss tensor: loss.backward().
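A tiny example of what the backward pass computes, using a toy two-parameter model and a squared-error loss. All values are made up so that the gradient can be checked by hand.

```python
import torch

theta = torch.tensor([1.0, -2.0], requires_grad=True)  # toy parameters
x = torch.tensor([3.0, 4.0])
y = torch.tensor(2.0)

y_hat = (theta * x).sum()      # 1*3 + (-2)*4 = -5
loss = (y - y_hat) ** 2        # (2 - (-5))^2 = 49

loss.backward()                # fills theta.grad with dL/dtheta

# Analytically, dL/dtheta = -2 * (y - y_hat) * x = -14 * x
print(theta.grad)              # tensor([-42., -56.])
```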

Caution

The gradient descent method requires computing the loss function for the whole training set before doing a single update.

This can be inefficient when large volumes of data are used for training the model.

Stochastic gradient descent (SGD) methods instead use a relatively small sample of the training data, called a mini-batch, at a time.

This reduces the amount of memory used for the intermediate operations carried out during the optimization process.

\(\theta^{t+1} = \theta^t - \eta \nabla_\theta L(Y_{b}, \hat{Y}_{b})\)

\(\eta\) controls the size of the update we perform on the current parameter values.

Note

In deep learning, this hyperparameter is known as the learning rate.
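The update rule can be tried out on a one-parameter toy problem. The sketch below minimizes the made-up loss \(L(\theta) = (\theta - 3)^2\), whose gradient is known in closed form; the learning rate and the number of steps are arbitrary choices.

```python
def grad(theta):
    # Gradient of the toy loss L(theta) = (theta - 3)^2
    return 2.0 * (theta - 3.0)

eta = 0.1     # learning rate
theta = 0.0   # initial parameter value

history = [theta]
for _ in range(50):
    # theta^{t+1} = theta^t - eta * gradient
    theta = theta - eta * grad(theta)
    history.append(theta)

print(history[1])  # first update moves theta from 0 towards 3
print(theta)       # close to the minimum at theta = 3
```

With this learning rate each step shrinks the distance to the minimum by a factor of 0.8; a learning rate above 1.0 would make the iterates diverge on this loss.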

Note

PyTorch can operate efficiently on multiple inputs at the same time. To do that, we can use a DataLoader to serve mini-batches of inputs.
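A minimal sketch of how a DataLoader serves mini-batches, using a toy dataset of random tensors. The dataset size and `batch_size=4` are assumptions for illustration; the workshop's own loaders are built from the CIFAR datasets.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy dataset: 10 random CHW "images" with integer labels.
toy_ds = TensorDataset(torch.rand(10, 3, 32, 32), torch.randint(0, 100, (10,)))
toy_dl = DataLoader(toy_ds, batch_size=4, shuffle=True)

# Each iteration yields a batch of inputs and the matching batch of labels.
batch_shapes = [(tuple(x.shape), tuple(y.shape)) for x, y in toy_dl]
print(batch_shapes)
```

Ten samples with a batch size of four give two full batches and a final batch of two samples.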

Note

Gradients are accumulated on every iteration, so we need to reset the accumulator with optimizer.zero_grad() for every new batch.
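The accumulation is easy to observe on a single toy parameter. In this sketch the "loss" is simply `2 * w`, so every backward pass contributes a gradient of 2.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)   # toy parameter
optimizer = torch.optim.SGD([w], lr=0.1)

(2.0 * w).backward()
first = w.grad.item()          # gradient of 2*w with respect to w

(2.0 * w).backward()           # no reset: the new gradient is added to the old one
accumulated = w.grad.item()

optimizer.zero_grad()          # reset before the next batch
(2.0 * w).backward()
after_reset = w.grad.item()

print(first, accumulated, after_reset)  # 2.0 4.0 2.0
```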

Note

To obtain the next iteration’s parameter values \(\theta^{t+1}\) we call optimizer.step(), which applies the update.

Note

To extract the loss value as a plain Python number, detached from the computation graph, use loss.item().

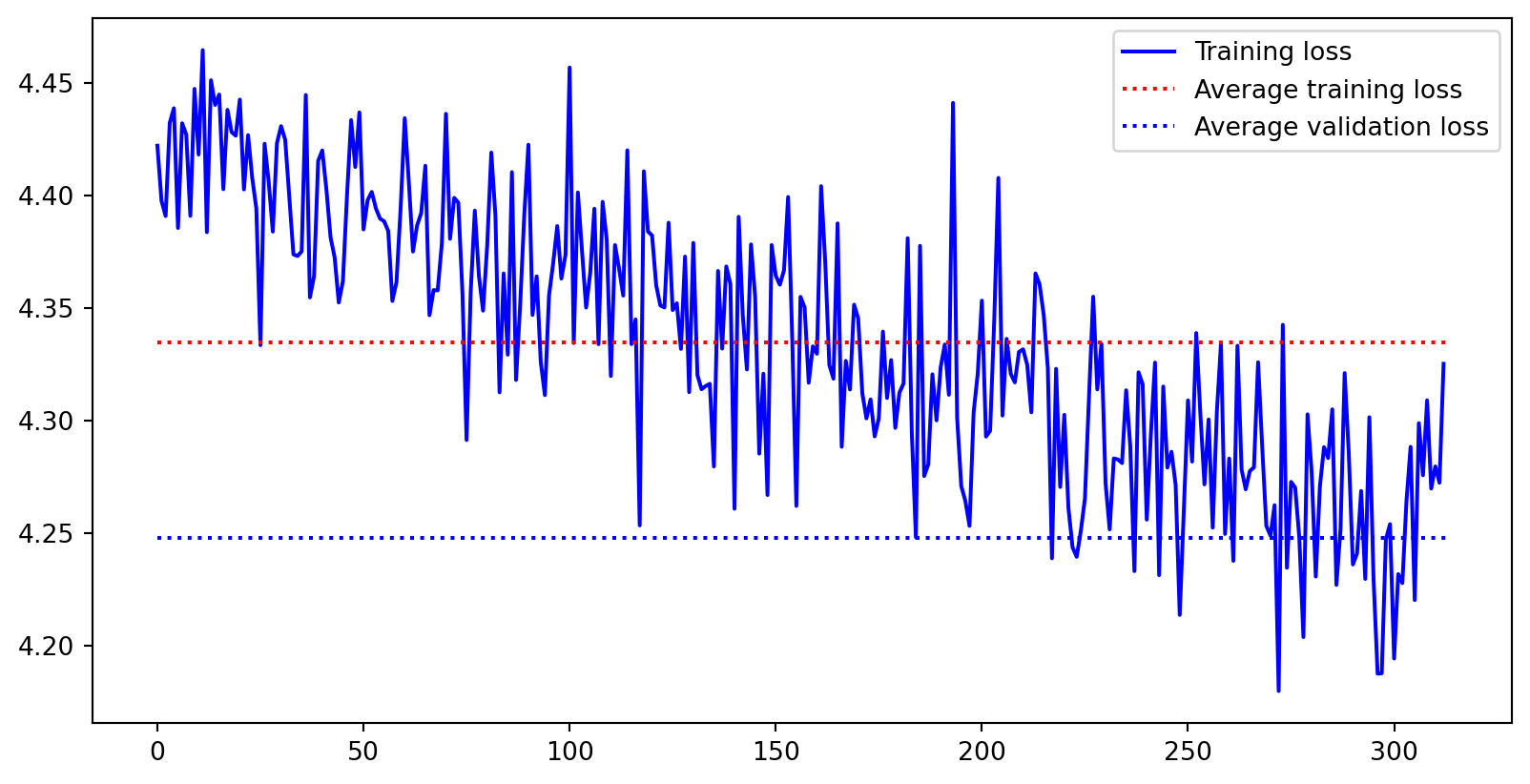

train_loss = []
train_loss_avg = 0
total_train_samples = 0

mlp_clf.train()

for x, y in cifar_train_dl:
    optimizer.zero_grad()

    y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) ) # Reshape it into a batch of vectors
    loss = loss_fun(y_hat, y)

    train_loss.append(loss.item())
    train_loss_avg += loss.item() * len(x)
    total_train_samples += len(x)

    loss.backward()
    optimizer.step()

train_loss_avg /= total_train_samples

Note

Because we don’t train the model with the validation set, back-propagation and optimization steps are not needed.

Additionally, we wrap the loop with torch.no_grad() to prevent the generation of gradients that could fill the memory unnecessarily.

val_loss_avg = 0
total_val_samples = 0

mlp_clf.eval()

with torch.no_grad():
    for x, y in cifar_val_dl:
        y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) ) # Reshape it into a batch of vectors
        loss = loss_fun(y_hat, y)

        val_loss_avg += loss.item() * len(x)
        total_val_samples += len(x)

val_loss_avg /= total_val_samples

import matplotlib.pyplot as plt

plt.plot(train_loss, "b-", label="Training loss")
plt.plot([0, len(train_loss)], [train_loss_avg, train_loss_avg], "r:", label="Average training loss")
plt.plot([0, len(train_loss)], [val_loss_avg, val_loss_avg], "b:", label="Average validation loss")
plt.legend()
plt.show()

num_epochs = 10
train_loss = []
val_loss = []

for e in range(num_epochs):
    train_loss_avg = 0
    total_train_samples = 0

    mlp_clf.train()
    for x, y in cifar_train_dl:
        optimizer.zero_grad()

        y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) ) # Reshape it into a batch of vectors
        loss = loss_fun(y_hat, y)

        train_loss_avg += loss.item() * len(x)
        total_train_samples += len(x)

        loss.backward()
        optimizer.step()

    train_loss_avg /= total_train_samples
    train_loss.append(train_loss_avg)

    val_loss_avg = 0
    total_val_samples = 0

    mlp_clf.eval()
    with torch.no_grad():
        for x, y in cifar_val_dl:
            y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) ) # Reshape it into a batch of vectors
            loss = loss_fun(y_hat, y)

            val_loss_avg += loss.item() * len(x)
            total_val_samples += len(x)

    val_loss_avg /= total_val_samples
    val_loss.append(val_loss_avg)

Performance metrics are used to measure how well a model carries out a task.

\(f(x) \approx y\)

\(f(x) = y + \epsilon = \hat{y}\)

Note

The output \(\hat{y}\) is called a prediction, a term borrowed from statistical regression analysis.

Important

Selecting the correct performance metrics depends on the training type, task, and even the distribution of the data.
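As an illustration of one such metric, the sketch below computes top-1 accuracy by hand in plain Python. The scores and labels are made up; in practice a library implementation would normally be preferred.

```python
def accuracy(scores, labels):
    """Fraction of samples whose highest-scoring class matches the label."""
    correct = 0
    for row, label in zip(scores, labels):
        predicted = max(range(len(row)), key=row.__getitem__)  # index of the largest score
        correct += int(predicted == label)
    return correct / len(labels)

# Made-up class scores for four samples and three classes.
scores = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4], [0.2, 0.5, 0.3]]
labels = [1, 0, 1, 1]

print(accuracy(scores, labels))  # 0.75
```

Accuracy can be misleading when classes are heavily imbalanced, which is one reason the choice of metric depends on the distribution of the data.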

from torchmetrics.classification import Accuracy

mlp_clf.eval()

train_acc_metric = Accuracy(task="multiclass", num_classes=100)

with torch.no_grad():
    for x, y in cifar_train_dl:
        y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) )
        train_acc_metric(y_hat.softmax(dim=1), y)

    train_acc = train_acc_metric.compute()

print(f"Training acc={train_acc}")

train_acc_metric.reset()

Training acc=0.12927499413490295

val_acc_metric = Accuracy(task="multiclass", num_classes=100)
test_acc_metric = Accuracy(task="multiclass", num_classes=100)

with torch.no_grad():
    for x, y in cifar_val_dl:
        y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) )
        val_acc_metric(y_hat.softmax(dim=1), y)

    val_acc = val_acc_metric.compute()

    for x, y in cifar_test_dl:
        y_hat = mlp_clf( x.reshape(-1, 3 * 32 * 32) )
        test_acc_metric(y_hat.softmax(dim=1), y)

    test_acc = test_acc_metric.compute()

print(f"Validation acc={val_acc}")
print(f"Test acc={test_acc}")

val_acc_metric.reset()
test_acc_metric.reset()

Validation acc=0.125
Test acc=0.12290000170469284

The most common operations in DL models for image processing are convolutions.

2D Convolution

The animation shows the convolution of a 7x7 pixels input image (bottom) with a 3x3 pixels kernel (moving window), that results in a 5x5 pixels output (top).

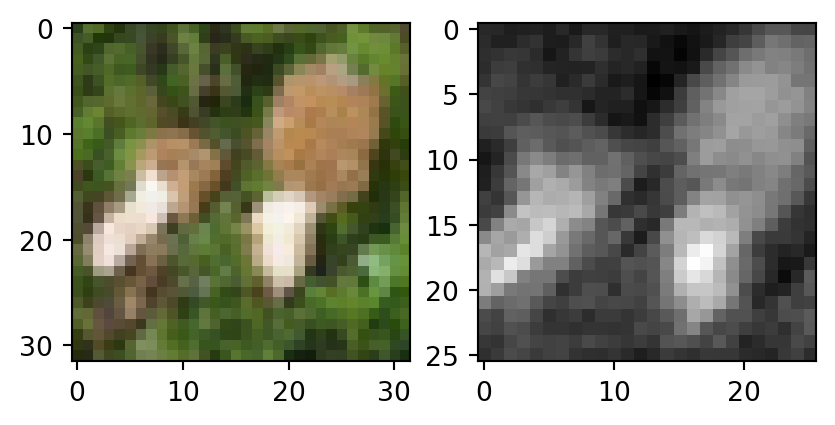
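The output size in this example follows from the standard formula for a convolution along one axis, \(\lfloor (n + 2p - k) / s \rfloor + 1\), for input size \(n\), kernel size \(k\), padding \(p\), and stride \(s\). A short sketch with a hypothetical helper `conv_out_size`:

```python
def conv_out_size(n, kernel_size, padding=0, stride=1):
    """Output length along one spatial axis of a convolution."""
    return (n + 2 * padding - kernel_size) // stride + 1

print(conv_out_size(7, 3))              # 5: the 7x7 input with a 3x3 kernel
print(conv_out_size(32, 7))             # 26: a 32x32 image with a 7x7 kernel
print(conv_out_size(32, 3, padding=1))  # 32: padding of 1 preserves the size
```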

from torch import nn

conv_1 = nn.Conv2d(in_channels=3, out_channels=1, kernel_size=7, padding=0, bias=True)

x, _ = next(iter(cifar_train_dl))
fx = conv_1(x)

type(fx), fx.dtype, fx.shape, fx.min(), fx.max()

(torch.Tensor, torch.float32, torch.Size([128, 1, 26, 26]), tensor(-0.1479, grad_fn=<MinBackward1>), tensor(1.0583, grad_fn=<MaxBackward1>))

Warning

The convolution layer is initialized with random values, so the results will vary.

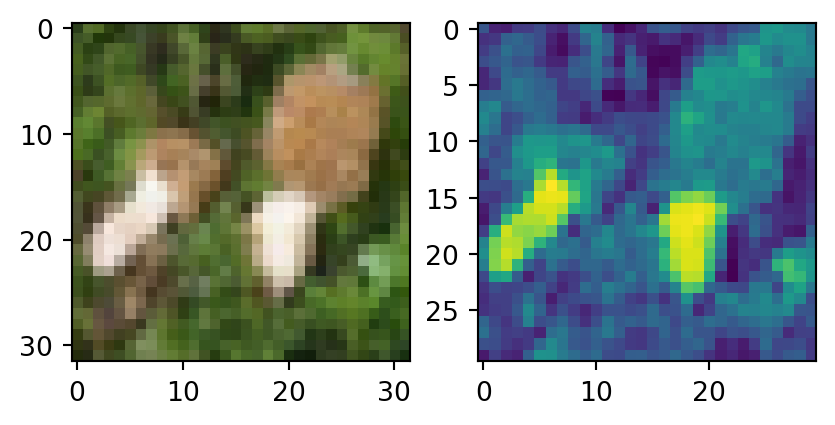

plt.rcParams['figure.figsize'] = [5, 5]

fig, ax = plt.subplots(1, 2)

ax[0].imshow(x[0].permute(1, 2, 0))

ax[1].imshow(fx.detach()[0, 0], cmap="gray")

plt.show()

Important

By default, outputs from PyTorch modules are tracked for back-propagation.

To visualize it with matplotlib we have to .detach() the tensor first.

fx = conv_1(x)

fig, ax = plt.subplots(1, 2)

ax[0].imshow(x[0].permute(1, 2, 0))

ax[1].imshow(fx.detach()[0].permute(1, 2, 0))

plt.show()

Experiment with different values and shapes of the kernel https://en.wikipedia.org/wiki/Kernel_(image_processing)

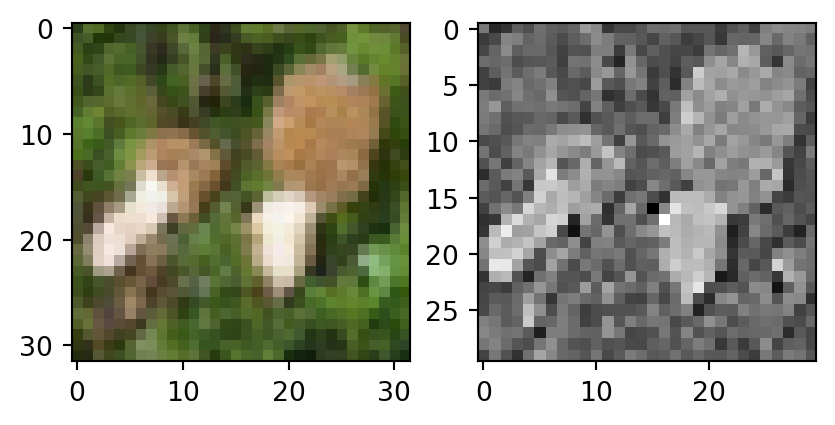

conv_1 = nn.Conv2d(in_channels=3, out_channels=1, kernel_size=3, padding=0, bias=False)

# Weight shape is (out_channels, in_channels, height, width) = (1, 3, 3, 3)
conv_1.weight.data[:] = torch.FloatTensor([
    [[[0, -1, 0], [-1, 5, -1], [0, -1, 0]],
     [[0, 0, 0], [0, 0, 0], [0, 0, 0]],
     [[0, 0, 0], [0, 0, 0], [0, 0, 0]]]
])

fx = conv_1(x)

fig, ax = plt.subplots(1, 2)

ax[0].imshow(x[0].permute(1, 2, 0))

ax[1].imshow(fx.detach()[0, 0], cmap="gray")

plt.show()


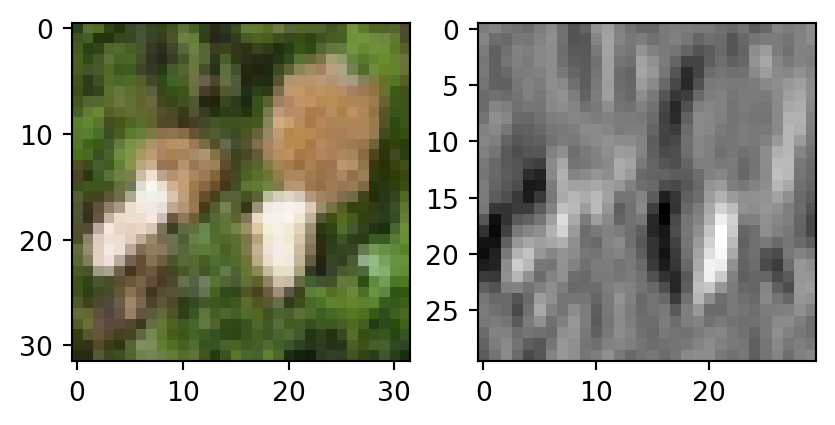

conv_1 = nn.Conv2d(in_channels=3, out_channels=1, kernel_size=3, padding=0, bias=False)

# Weight shape is (out_channels, in_channels, height, width) = (1, 3, 3, 3)
conv_1.weight.data[:] = torch.FloatTensor([
    [[[1, 0, -1], [1, 0, -1], [1, 0, -1]],
     [[1, 0, -1], [1, 0, -1], [1, 0, -1]],
     [[1, 0, -1], [1, 0, -1], [1, 0, -1]]]
])

fx = conv_1(x)

fig, ax = plt.subplots(1, 2)

ax[0].imshow(x[0].permute(1, 2, 0))

ax[1].imshow(fx.detach()[0, 0], cmap="gray")

plt.show()


InceptionV3

U-Net

By Daniel Voigt Godoy - https://github.com/dvgodoy/dl-visuals/, CC BY 4.0, Link


lenet_clf = nn.Sequential(
    nn.Conv2d(in_channels=3, out_channels=6, kernel_size=5, bias=True),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Conv2d(in_channels=6, out_channels=16, kernel_size=5, bias=True),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Flatten(),
    nn.Linear(in_features=16*5*5, out_features=120, bias=True),
    nn.ReLU(),
    nn.Linear(in_features=120, out_features=84, bias=True),
    nn.ReLU(),
    nn.Linear(in_features=84, out_features=100, bias=True),
)

Note

Pooling layers are used to downsample feature maps to summarize information from large regions.
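The effect of the pooling layers on the feature map size can be traced by hand. This sketch follows a 32x32 input through the two convolution and pooling stages of the LeNet-style model and arrives at the `16*5*5` input size of its first linear layer. The helper functions are hypothetical and assume no padding and the default pooling stride, which equals the kernel size.

```python
def conv_out_size(n, kernel_size, padding=0, stride=1):
    """Output length along one spatial axis of a convolution."""
    return (n + 2 * padding - kernel_size) // stride + 1

def pool_out_size(n, kernel_size):
    """Output length of max pooling with stride equal to the kernel size."""
    return (n - kernel_size) // kernel_size + 1

size = 32                       # CIFAR images are 32x32
size = conv_out_size(size, 5)   # 28 after the first 5x5 convolution
size = pool_out_size(size, 2)   # 14 after the first 2x2 max pooling
size = conv_out_size(size, 5)   # 10 after the second 5x5 convolution
size = pool_out_size(size, 2)   # 5 after the second 2x2 max pooling

flat_features = 16 * size * size  # 16 output channels from the second convolution
print(size, flat_features)        # 5 400
```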

from torch import optim

num_epochs = 10
train_loss = []
val_loss = []

# Move the model to the GPU when one is available
if torch.cuda.is_available():
    lenet_clf.cuda()

optimizer = optim.SGD(lenet_clf.parameters(), lr=0.01)

for e in range(num_epochs):
    train_loss_avg = 0
    total_train_samples = 0

    lenet_clf.train()
    for x, y in cifar_train_dl:
        optimizer.zero_grad()

        if torch.cuda.is_available():
            x = x.cuda()

        # Predictions are moved back to the CPU, where the labels are stored
        y_hat = lenet_clf( x ).cpu()
        loss = loss_fun(y_hat, y)

        train_loss_avg += loss.item() * len(x)
        total_train_samples += len(x)

        loss.backward()
        optimizer.step()

    train_loss_avg /= total_train_samples
    train_loss.append(train_loss_avg)

    val_loss_avg = 0
    total_val_samples = 0

    lenet_clf.eval()
    with torch.no_grad():
        for x, y in cifar_val_dl:
            if torch.cuda.is_available():
                x = x.cuda()

            y_hat = lenet_clf( x ).cpu()
            loss = loss_fun(y_hat, y)

            val_loss_avg += loss.item() * len(x)
            total_val_samples += len(x)

    val_loss_avg /= total_val_samples
    val_loss.append(val_loss_avg)

lenet_clf.eval()

val_acc_metric = Accuracy(task="multiclass", num_classes=100)
test_acc_metric = Accuracy(task="multiclass", num_classes=100)
train_acc_metric = Accuracy(task="multiclass", num_classes=100)

with torch.no_grad():
    for x, y in cifar_train_dl:
        if torch.cuda.is_available():
            x = x.cuda()

        y_hat = lenet_clf( x ).cpu()
        train_acc_metric(y_hat.softmax(dim=1), y)

    train_acc = train_acc_metric.compute()

    for x, y in cifar_val_dl:
        if torch.cuda.is_available():
            x = x.cuda()

        y_hat = lenet_clf( x ).cpu()
        val_acc_metric(y_hat.softmax(dim=1), y)

    val_acc = val_acc_metric.compute()

    for x, y in cifar_test_dl:
        if torch.cuda.is_available():
            x = x.cuda()

        y_hat = lenet_clf( x ).cpu()
        test_acc_metric(y_hat.softmax(dim=1), y)

    test_acc = test_acc_metric.compute()

print(f"Training acc={train_acc}")
print(f"Validation acc={val_acc}")
print(f"Test acc={test_acc}")

train_acc_metric.reset()
val_acc_metric.reset()
test_acc_metric.reset()

Training acc=0.02437499910593033
Validation acc=0.020899999886751175
Test acc=0.02250000089406967